Grid+

In my previous post I stated I would try to learn more about token models and the associated technology by investigating crypto companies. First up is a company called Grid+.

Grid+ aims to be a utility provider that exposes its customers to wholesale electricity prices. I really like their blog posts because they put a lot of effort into explaining the thought process behind the company. One of the co-founders, Alex Miller, actually encourages criticism and I think his transparency is refreshing. They’re one of the few companies who actually go into detail about how blockchain technology can be used in a real-life business, and reading their posts really helps to develop my understanding of this technology.

Grid+ Value

First up it’s useful to look at what value Grid+ can potentially bring to a customer.

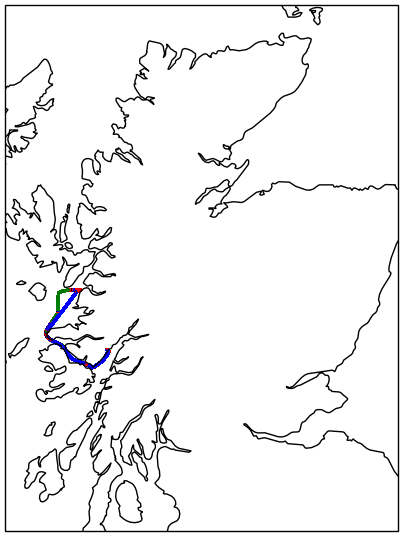

Grid+ wants to expose its customers to wholesale electricity prices. Traditional utilities use fixed prices which are not market driven, but wholesale prices vary throughout the day. Grid+ will offer prices for both consumed and generated energy based on the wholesale price (with a relatively small markup for most customers). This means customers have the opportunity to buy electricity when it is cheap, store it in batteries and sell when the price goes up. To me that’s pretty cool by itself.
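To make the buy-low, sell-high idea concrete, here is a minimal Python sketch. The prices, battery size and round-trip efficiency are numbers I made up for illustration, not Grid+ figures, and `arbitrage_profit` is a hypothetical helper, not anything from Grid+.

```python
def arbitrage_profit(buy_price, sell_price, kwh_stored, efficiency=0.9):
    """Profit in dollars from charging a battery at buy_price and
    discharging it at sell_price (both in $/kWh).

    efficiency is an assumed fraction of the stored energy that can
    actually be sold back after charge/discharge losses.
    """
    cost = buy_price * kwh_stored
    revenue = sell_price * kwh_stored * efficiency
    return revenue - cost


# Hypothetical day: cheap overnight power, expensive evening peak.
profit = arbitrage_profit(buy_price=0.02, sell_price=0.10, kwh_stored=10)
print(f"${profit:.2f}")  # $0.70
```

Note that with losses included, buying and selling at the same price loses money, so the price spread has to be big enough to cover the battery's inefficiency.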

The plan is to operate using the existing grid infrastructure. In theory that’s a smart way to save costs, especially for a start-up. From the description of how the system will work (see below) I wonder if this might potentially restrict them.

They place a lot of emphasis on giving their users ‘agency’, which means users control the sending of funds, etc. In this system there is minimal human administration and minimal credit risk. Ultimately this keeps the price low for customers by avoiding traditional costs associated with admin, etc.

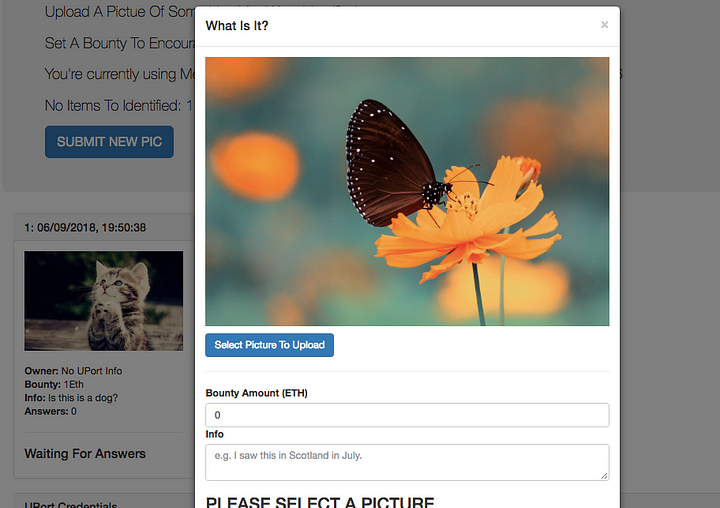

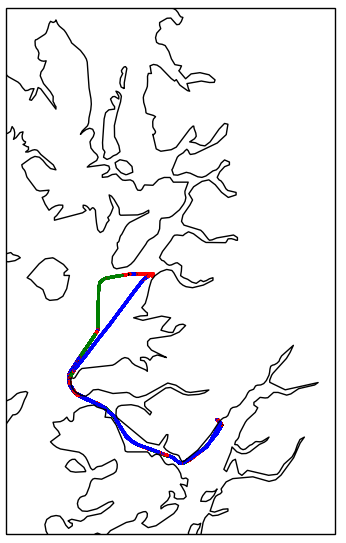

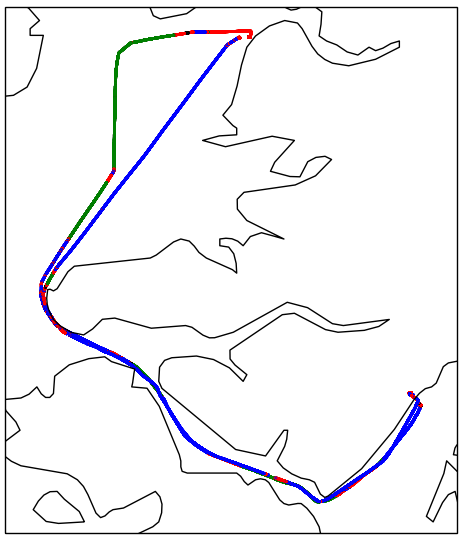

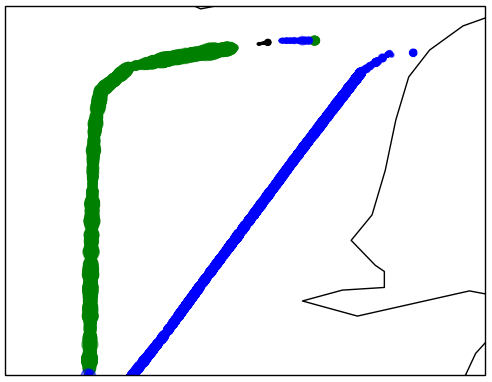

An IoT ‘Agent’ device is used to automatically handle payments via the Ethereum network. The goal is to create a p2p market between Grid+ Agents. A customer who has electricity to sell can offer it at a local rate lower than the nearest wholesale rate. It will then be bought by another customer, with the two customers’ Agents handling payment via a Grid+ hub on the Raiden network.
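As I understand it, the buying Agent's decision boils down to something like the sketch below. This is only my own toy illustration: `pick_offer`, the seller names and the prices are all hypothetical, and the real Agents would settle through payment channels rather than a Python list.

```python
def pick_offer(offers, wholesale_price):
    """Return the cheapest (seller, price) offer that undercuts the
    wholesale price, or None if buying wholesale is the better deal."""
    cheaper = [offer for offer in offers if offer[1] < wholesale_price]
    if not cheaper:
        return None
    return min(cheaper, key=lambda offer: offer[1])


# Hypothetical local offers in $/kWh.
offers = [("alice", 0.08), ("bob", 0.06), ("carol", 0.12)]
best = pick_offer(offers, wholesale_price=0.10)
print(best)  # ('bob', 0.06)
```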

Grid+ acts as the bridge between the old world of traditional energy suppliers and the new world of prosumers and p2p transactions.

They also have ambitions to make the Agent ‘smart’ to help the customer purchase the cheapest electricity possible. The Agent can be connected to other sensors such as thermostats, so that it can predict energy usage and save money on energy purchasing.

Ultimately these are all potentially smart ways to provide cheaper electricity to the customer.

Tokens

Grid+ Tokens

Grid+ will operate with a two-token model, a BOLT token and a GRID token.

The BOLT token will be treated by Grid+ as a stable-coin, redeemable by Grid+ customers for $1 worth of energy from Grid+ and backed by USD deposits.

The GRID token will allow Grid+ customers to purchase electricity from Grid+ at wholesale price. 1 GRID token = 500 kWh at the wholesale price.
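To get a feel for what one GRID token might be worth to a customer, here is a rough back-of-the-envelope calculation. The 500 kWh figure is from Grid+, but the wholesale price and the markup are numbers I picked purely for illustration, and `grid_token_saving` is my own hypothetical helper.

```python
KWH_PER_GRID = 500  # 1 GRID token = 500 kWh at the wholesale price


def grid_token_saving(wholesale_price, markup):
    """Dollars saved by redeeming one GRID token, i.e. the markup
    the customer avoids paying on 500 kWh.

    wholesale_price is in $/kWh; markup is a fraction (0.2 = 20%).
    """
    return KWH_PER_GRID * wholesale_price * markup


# Assumed values: $0.03/kWh wholesale and a 20% markup.
saving = grid_token_saving(wholesale_price=0.03, markup=0.20)
print(f"${saving:.2f}")  # $3.00
```

So under these assumptions the token is a discount voucher rather than free electricity: the customer still pays the wholesale price for the 500 kWh.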

Token Evaluation

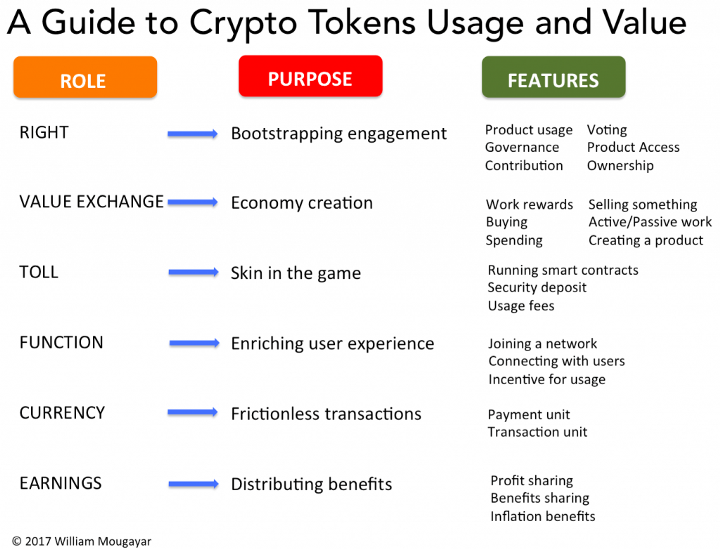

Working through the twenty questions proposed by William Mougayar in Tokenomics — A Business Guide to Token Usage, Utility and Value really helps to think about how the tokens are used:

| Question | BOLT | GRID |
| --- | --- | --- |
| Is the token tied to a product usage, i.e. does it give the user exclusive access to it, or provide interaction rights to the product? | Yes. Provides access to Grid+ energy. | Yes. 1 GRID token = 500 kWh at the wholesale price. |
| Does the token grant a governance action, like voting on a consensus related or other decision-making factor? | No | No |
| Does the token enable the user to contribute to a value-adding action for the network or market that is being built? | Yes. Selling generated electricity on a p2p network. | No |
| Does the token grant an ownership of sorts, whether it is real or a proxy to a value? | Yes. Ownership of energy. | Yes. Ownership of wholesale energy. |
| Does the token result in a monetizable reward based on an action by the user (active work)? | Yes. Any revenue earned by a customer from selling electricity is earned in BOLT tokens instead of fiat and is stored on the Agent. | No |
| Does the token grant the user a value based on sharing or disclosing some data about them (passive work)? | No | No |
| Is buying something part of the business model? | Yes | Yes |
| Is selling something part of the business model? | Yes | No |
| Can users create a new product or service? | No | No |
| Is the token required to run a smart contract or to fund an oracle? | No | No |
| Is the token required as a security deposit to secure some aspect of the blockchain’s operation? | No | No |
| Is the token (or a derivative of it, like a stable coin or gas unit) used to pay for some usage? | Yes. Pay for used electricity. | No |
| Is the token required to join a network or other related entity? | Yes. Can't join Grid+ network without token. | No |
| Does the token enable a real connection between users? | Yes? Allows p2p payments between users. | No |
| Is the token given away or offered at a discount, as an incentive to encourage product trial or usage? | No | Yes. I think it incentivises people to start using Grid+. |
| Is the token your principal payment unit, essentially functioning as an internal currency? | Yes | No |
| Is the token (or derivative of it) the principal accounting unit for all internal transactions? | I think this is a yes although it may be kW instead. | No |
| Does your blockchain autonomously distribute profits to token holders? | Yes. Agents are automatically updated. | No |
| Does your blockchain autonomously distribute other benefits to token holders? | Yes. Possibly Casper, etc? | No |
| Is there a related benefit to your users, resulting from built-in currency inflation? | No | No |
| Total | 13/20 | 4/20 |

Which leads to the conclusion that the tokens offer the following utility:

The Value Exchange

The BOLT token is a unit of value that allows the buying and selling of energy between Grid+ and users and also user to user.

Users can also earn BOLT tokens by generating and selling electricity or by arbitrage.

The Toll

It’s obvious that the BOLT token is used to pay for electricity used, but I’m not 100% sure on this one, maybe because a token isn’t essential for this function. In the User Agency post Alex does admit this part could be done using traditional infrastructure.

The Function

As far as I can see the GRID token is mainly an incentive: it offers a discount that encourages users to use Grid+ services.

The Currency

Traditional payment processing fees and admin costs are reduced.

p2p payments are made economically and technologically viable.

Conclusions

I’m not sure if 13/20 is considered a high score, but my feeling is that the BOLT token’s utility more than proves it is essential to the business model.

Initially I was sceptical about the use of the GRID token but after working through the questions I can see it’s potentially a really nice way to incentivise people to start using the service.

There are also some other interesting opportunities described in the post Casper, Plasma, and the Grid+ Agent, which demonstrate that the Grid+ team are super innovative when it comes to using blockchain technology and are still exploring new ideas – it must be a cool place to work! I think the last paragraph from Alex Miller’s The P2P Grid We All Want post demonstrates this:

However, this brainstorming session is likely an exercise in futility because the future is always uncertain and we’re talking about a future that is likely a decade or more away. Nevertheless, we at Grid+ are primarily motivated by bringing forth the future of energy. If Grid+ is so successful that our customers eventually dis-intermediate us and we really have no way to make money, then perhaps we’ve accomplished what we set out to do. We as a team would be happy with an outcome that empowers the people and establishes a new, transactive grid because that would mean we will have proven the power of decentralizing technologies — as far as we’re concerned, that’s a win for humanity.

How Grid+ Will Work

The following isn’t actually that relevant from the token model perspective, but it’s really interesting to see the nuts and bolts of how a crypto company could function. Really cool of the Grid+ team to share this.

A customer purchases an agent (a small device capable of making signatures) and claims that device based on a serial number printed on the box.

Before shipping the agent, Grid+ whitelists its serial number on their registry contract.

Grid+ maps that serial number to an Ethereum address, which is a function of the agent’s public key.

Agent Address – created when the device first boots up.

Owner Address – belongs to the customer who enters the serial number printed on the device into the Grid+ web console.
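To check my own understanding of the registration steps above, I sketched them as a toy in-memory registry. This is not the Grid+ registry contract (which would live on Ethereum); the class, method names, placeholder addresses and the exact checks are all my assumptions.

```python
class Registry:
    """Toy stand-in for the registry contract (hypothetical design)."""

    def __init__(self):
        self.whitelist = set()  # serial numbers approved before shipping
        self.agent_of = {}      # serial -> agent address (set on first boot)
        self.owner_of = {}      # serial -> owner address (set on claim)

    def whitelist_serial(self, serial):
        """Grid+ approves a device before it ships."""
        self.whitelist.add(serial)

    def register_agent(self, serial, agent_address):
        """The device reports the address it created on first boot."""
        if serial not in self.whitelist:
            raise ValueError("serial not whitelisted")
        self.agent_of[serial] = agent_address

    def claim(self, serial, owner_address):
        """The customer claims the device via the web console."""
        if serial not in self.whitelist:
            raise ValueError("serial not whitelisted")
        if serial in self.owner_of:
            raise ValueError("device already claimed")  # assumed rule
        self.owner_of[serial] = owner_address


registry = Registry()
registry.whitelist_serial("SN-001")
registry.register_agent("SN-001", "0xAGENT")  # placeholder, not a real address
registry.claim("SN-001", "0xOWNER")           # placeholder, not a real address
```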

Customers will make a refundable initial deposit then will prepay each month.

Fiat payments will be converted to USDX tokens.

Every few hours Grid+ requests payment from a customer’s agent commensurate with the amount of energy used.

If the customer exceeds her monthly allowance (or her agent simply doesn’t pay), the customer will be notified and deductions are made from her initial account deposit.

Your utility company tracks how much power goes into your house and how much power goes out of your house, and then sends you a bill based on the net result.

Smart meters send data to the ISO (Independent System Operator). The ISO makes the data available via an API.

Grid+ will query the ISO (ERCOT in Texas) and bill customers’ Agents based on that usage.
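Putting the payment and metering steps together, the billing loop might look roughly like the sketch below. It is only my interpretation: `settle`, the interval data and the prices are hypothetical, I ignore the Grid+ markup, and the real system would move tokens between Agents rather than subtract floats.

```python
def settle(intervals, balance, deposit):
    """Bill a customer for each metering interval.

    intervals: list of (kwh_in, kwh_out, price) tuples, where price is
    the wholesale price in $/kWh for that interval.
    balance: the customer's prepaid amount for the month.
    deposit: the refundable initial deposit.
    Returns the (balance, deposit) left after billing.
    """
    for kwh_in, kwh_out, price in intervals:
        # Net metering: the charge is negative when the customer exports more.
        charge = (kwh_in - kwh_out) * price
        balance -= charge
        if balance < 0:
            # Prepayment exhausted: take the shortfall from the deposit.
            deposit += balance
            balance = 0.0
    return balance, deposit


# Hypothetical usage data for three billing intervals.
intervals = [(5, 0, 0.04), (2, 4, 0.10), (10, 0, 0.05)]
balance, deposit = settle(intervals, balance=0.30, deposit=20.0)
print(f"balance=${balance:.2f} deposit=${deposit:.2f}")  # balance=$0.00 deposit=$19.80
```

In the second interval the customer exports more than she imports, so her balance goes up instead of down.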