Introduction

This is a write-up of my entry to the ETHGlobal Autonomous Worlds hackathon, the imaginatively titled MUD Powered Balancer Swaps. (GitHub)

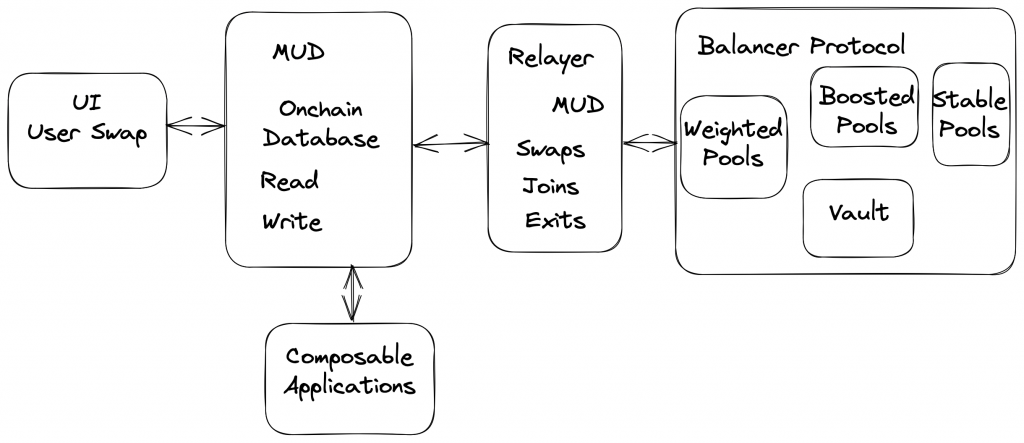

Unfortunately I have no game development skills, so the idea was to see how MUD could be used with an existing DeFi protocol: in this case, by creating a new Balancer Relayer integrated with MUD, plus a front end to show swap data.

From the MUD docs: “MUD is a framework for ambitious Ethereum applications. It compresses the complexity of building EVM apps with a tightly integrated software stack.” The standout for me is:

No indexers or subgraphs needed, and your frontend is magically synchronized!

Since my early days at Balancer the Subgraph has been one of the main pain points I’ve come across, and I’ve always thought there’s a clear need (and opportunity) for a better way of doing things. When I first saw the DevCon videos showing how the MUD framework worked, it reminded me of the early days of the Meteor framework, which seemed like magical frontend/backend sync technology when I first saw it. With MUD we also get the whole decentralised/composability aspect too. It really seems like this could be a challenger, and the hackathon is a perfect way to get some experience hacking on it!

Solution Overview

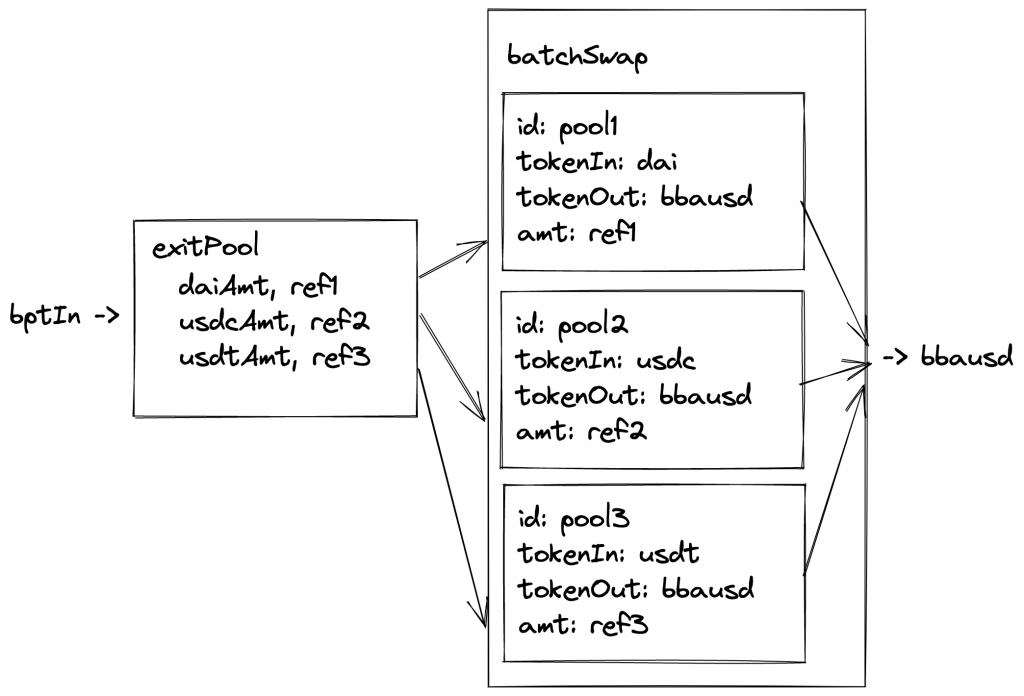

Balancer Relayers are contracts that make calls to the Balancer Vault on behalf of users. They can use the sender’s ERC20 Vault allowance, internal balance, and BPTs on their behalf. As I’ve written before, multiple actions such as pool joins/exits, swaps, etc. can be chained together, improving the UX.

It’s important to note that because Relayers have permissions over user funds they have to be authorised by the protocol. This authorisation is handled by Balancer Governance, and you can see a past governance proposal and authorisation PR here and here.

The MUD Store is the onchain database; it can be written to and read from much like a normal database. The MUD framework handles all the complexity and makes developing with the Store super smooth.

By developing a new MUD-enabled Relayer we can combine a well-established, battle-tested protocol (Balancer 80/20 pools in particular could be interesting as liquidity for gaming assets) with all the benefits the MUD framework offers.

The How

Mainnet Forking

By using a local node forked from mainnet we can use all the deployed Balancer infrastructure, including the real pools, assets and governance setup. To build this into the dev setup (based on the MUD template project) I added a .env file with a mainnet archive node URL from Alchemy and edited the node script in the root package.json like so:

    "node": "anvil -b 1 --block-base-fee-per-gas 0 --chain-id 31337 --fork-block-number 17295542 -f $(. ./.env && echo $ALCHEMY_URL)"

Now when the pnpm dev command is run it spins up a forked version of mainnet (with a chainId of 31337, which keeps everything else working), and all the MUD contracts used during the normal dev process are deployed there.

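The node script above packs several decisions into one line. As a rough sketch (the `ForkOptions` type and `buildAnvilArgs` helper are hypothetical names, not part of the project, and the URL is a placeholder), the same flags can be assembled in TypeScript, which makes it easier to see what each one does:

```typescript
// Hypothetical helper: assembles the anvil flags used in the `node` script.
interface ForkOptions {
  forkUrl: string;          // archive node RPC URL, e.g. the ALCHEMY_URL value from .env
  forkBlockNumber: number;  // block the fork is pinned to
  chainId: number;          // 31337 keeps the rest of the dev tooling working
  blockTimeSeconds: number; // interval between mined blocks
}

function buildAnvilArgs(opts: ForkOptions): string[] {
  if (!opts.forkUrl) {
    throw new Error("Missing fork URL (is ALCHEMY_URL set in .env?)");
  }
  return [
    "-b", String(opts.blockTimeSeconds),   // block time in seconds
    "--block-base-fee-per-gas", "0",       // zero base fee on the local chain
    "--chain-id", String(opts.chainId),
    "--fork-block-number", String(opts.forkBlockNumber),
    "-f", opts.forkUrl,                    // fork from this RPC endpoint
  ];
}

const args = buildAnvilArgs({
  forkUrl: "https://example.invalid/rpc", // placeholder, not a real endpoint
  forkBlockNumber: 17295542,
  chainId: 31337,
  blockTimeSeconds: 1,
});
console.log(["anvil", ...args].join(" "));
```

Pinning the fork block means every run starts from the same pool balances, which makes swap results reproducible between sessions.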
Relayer With MUD

The most recent Balancer Relayer V5 code can be found here. In the hackathon spirit I decided to develop a very simple (and unsafe) version. I initially tried replicating the Relayer/Library/Multicall approach used by Balancer, but had issues with proxy permissions on the Store that I didn’t have time to solve. My version allows a user to execute a singleSwap. The complete code is shown below:

    import { System } from "@latticexyz/world/src/System.sol";
    import { Swap } from "../codegen/Tables.sol";
    import { IVault } from "@balancer-labs/v2-interfaces/contracts/vault/IVault.sol";
    import "@balancer-labs/v2-interfaces/contracts/standalone-utils/IBalancerRelayer.sol";

    contract RelayerSystem is System {
      IVault private immutable _vault;

      constructor() {
        // Balancer V2 Vault address
        _vault = IVault(address(0xBA12222222228d8Ba445958a75a0704d566BF2C8));
      }

      function getVault() public view returns (IVault) {
        return _vault;
      }

      function swap(
        IVault.SingleSwap memory singleSwap,
        IVault.FundManagement calldata funds,
        uint256 limit,
        uint256 deadline,
        uint256 value
      ) external payable returns (uint256) {
        require(funds.sender == msg.sender || funds.sender == address(this), "Incorrect sender");
        uint256 result = getVault().swap{ value: value }(singleSwap, funds, limit, deadline);
        // Hash the packed values to get the record key. A direct bytes32(...) cast of the
        // 84 packed bytes would keep only the first 32 (the block number), so two swaps
        // in the same block would overwrite each other.
        bytes32 key = keccak256(abi.encodePacked(block.number, msg.sender, gasleft()));
        // Record the swap in the MUD Store
        Swap.set(key, address(singleSwap.assetIn), address(singleSwap.assetOut), singleSwap.amount, result);
        return result;
      }
    }

I think the simplicity of the code snippet really demonstrates the ease of development using MUD. By simply inheriting from the MUD System I can read from and write to the MUD Store. In this case I want to write the assetIn, assetOut, amount and result for the trade being executed into the Swap table in the Store, where it can be consumed by whoever needs it (see the Front End section below). I do this in:

    Swap.set(key, address(singleSwap.assetIn), address(singleSwap.assetOut), singleSwap.amount, result);

To set up the Swap table all I have to do is edit the mud.config.ts file to look like:

    export default mudConfig({
      tables: {
        Swap: {
          schema: {
            assetIn: "address",
            assetOut: "address",
            amount: "uint256",
            amountReturned: "uint256"
          }
        }
      },
    });

The rest (including deployment, etc.) is all taken care of by the framework 👏

Permissions

Before I can execute swaps there is some housekeeping to take care of. Any Balancer Relayer must be granted permission via Governance before it can be used with the Vault. In practice this means that the Authoriser’s grantRoles(roles, relayer) function must be called from a Governance address. By checking out previous governance actions we can see that the DAO Multisig has previously been used to grant roles to relayers. Using hardhat_impersonateAccount on our fork we can send the transaction as if it came from the DAO and grant the required roles to our Relayer. In our case the World calls the Relayer by proxy, so we grant the roles to the World address (not safe in the real world :P).

    async function grantRelayerRoles(account: string) {
      const rpcUrl = `http://127.0.0.1:8545`;
      const provider = new JsonRpcProvider(rpcUrl);
      // These are the join/exit/swap roles for Vault
      const roles = [
        "0x1282ab709b2b70070f829c46bc36f76b32ad4989fecb2fcb09a1b3ce00bbfc30",
        "0xc149e88b59429ded7f601ab52ecd62331cac006ae07c16543439ed138dcb8d34",
        "0x78ad1b68d148c070372f8643c4648efbb63c6a8a338f3c24714868e791367653",
        "0xeba777d811cd36c06d540d7ff2ed18ed042fd67bbf7c9afcf88c818c7ee6b498",
        "0x0014a06d322ff07fcc02b12f93eb77bb76e28cdee4fc0670b9dec98d24bbfec8",
        "0x7b8a1d293670124924a0f532213753b89db10bde737249d4540e9a03657d1aff"
      ];
      // We impersonate the Balancer Governance Safe address as it is authorised to grant roles
      await provider.send('hardhat_impersonateAccount', [governanceSafeAddr]);
      const signer = provider.getSigner(governanceSafeAddr);
      const authoriser = new Contract(authoriserAddr, authoriserAbi, signer);
      const canPerformBefore = await authoriser.callStatic.canPerform(roles[0], account, balancerVaultAddr);
      // Grants the set roles for the account to perform on behalf of users
      const tx = await authoriser.grantRoles(roles, account);
      await tx.wait();
      const canPerformAfter = await authoriser.callStatic.canPerform(roles[0], account, balancerVaultAddr);
      console.log(canPerformBefore, canPerformAfter);
    }

The World address is updated each time a change is made to the contracts, so it’s useful to have a helper:

    import worldsJson from "../../contracts/worlds.json";

    export function getWorldAddress(): string {
      const worlds = worldsJson as Partial<Record<string, { address: string; blockNumber?: number }>>;
      const world = worlds['31337'];
      if (!world) throw Error('No World Address');
      return world.address;
    }

The Relayer must also be approved by the user who is executing the swap. In this case I select a user account that I know already has some funds and approvals for the Balancer Vault. That account must call setRelayerApproval(account, relayer, true) on the Balancer Vault.

    async function approveRelayer(account: string, relayer: string) {
      const rpcUrl = `http://127.0.0.1:8545`;
      const provider = new JsonRpcProvider(rpcUrl);
      await provider.send('hardhat_impersonateAccount', [account]);
      const signer = provider.getSigner(account);
      const vault = new Contract(balancerVaultAddr, vaultAbi, signer);
      const tx = await vault.setRelayerApproval(account, relayer, true);
      await tx.wait();
      const relayerApproved = await vault.callStatic.hasApprovedRelayer(account, relayer);
      console.log(`relayerApproved: `, relayerApproved);
    }

In packages/helpers/src/balancerAuth.ts there’s a helper script that handles all this. It can be run using pnpm auth and should be run each time a new World is deployed.
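Because the World address changes between deployments, it can help to fail early with a clear message before running the authorisation steps. A minimal sketch, assuming the worlds.json shape used by the helper above (chain ID mapped to `{ address, blockNumber? }`); the `resolveWorld` function and the sample data are illustrative only:

```typescript
type WorldsFile = Partial<Record<string, { address: string; blockNumber?: number }>>;

// Hypothetical variant of getWorldAddress that takes the parsed file and a chain ID.
function resolveWorld(worlds: WorldsFile, chainId: number): { address: string; blockNumber?: number } {
  const world = worlds[String(chainId)];
  if (!world) {
    throw new Error(`No World deployed for chain ${chainId}`);
  }
  // Basic sanity check: 0x followed by 40 hex characters.
  if (!/^0x[0-9a-fA-F]{40}$/.test(world.address)) {
    throw new Error(`Malformed World address: ${world.address}`);
  }
  return world;
}

// Sample data only; the real file is the worlds.json in the contracts package.
const sampleWorlds: WorldsFile = {
  "31337": { address: "0x5FbDB2315678afecb367f032d93F642f64180aa3", blockNumber: 17295543 },
};
console.log(resolveWorld(sampleWorlds, 31337).address);
```

Passing the parsed file in as an argument keeps the lookup easy to test without a deployment on disk.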

Front End

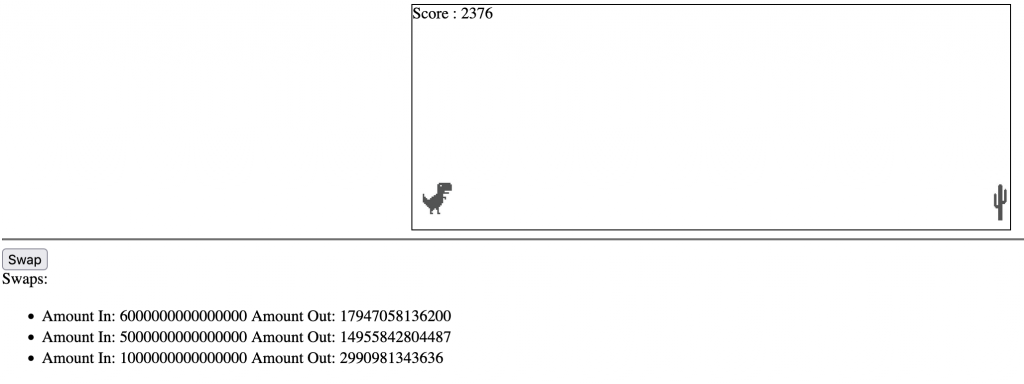

Disclaimer – my front-end UI is ugly and some of the code is hacky, but it works! The idea here was to just show a super simple UI that updates anytime a swap is made through our relayer.

To trigger a swap via the UI I’ve got a simple button wired up to a systemCall:

    const worldSwap = async (poolId: string, assetIn: string, assetOut: string, amount: string) => {
      const rpcUrl = `http://127.0.0.1:8545`;
      const provider = new JsonRpcProvider(rpcUrl);
      // Impersonates testAccount which we know has balances for swapping
      await provider.send('hardhat_impersonateAccount', [testAccount]);
      const signer = provider.getSigner(testAccount);
      const singleSwap = {
        poolId,
        kind: '0', // SwapKind.GIVEN_IN
        assetIn,
        assetOut,
        amount,
        userData: '0x'
      };
      const funds = {
        sender: testAccount,
        fromInternalBalance: false,
        recipient: testAccount,
        toInternalBalance: false
      };
      const limit = '0';
      const deadline = '999999999999999999';
      console.log(`Sending swap...`);
      const test = await worldContract.connect(signer).swap(singleSwap, funds, limit, deadline, '0');
      console.log(`Did it work?`);
    };

I took the approach of impersonating the test account that we previously set up the Relayer permission for, to avoid having to handle approvals, etc. via the UI. We just submit the swap data via the worldContract, which proxies the call to the Relayer.
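The call above hard-codes kind, limit and deadline. As a sketch of how those inputs could be built more explicitly (all helper names here are hypothetical; kind 0 corresponds to GIVEN_IN in the Vault's SwapKind enum, and the deadline is a UNIX timestamp in seconds):

```typescript
// Mirrors the Vault's SwapKind enum: GIVEN_IN = 0, GIVEN_OUT = 1.
const SwapKind = { GIVEN_IN: 0, GIVEN_OUT: 1 } as const;

interface SingleSwap {
  poolId: string;
  kind: number;
  assetIn: string;
  assetOut: string;
  amount: string; // integer amount in the token's smallest unit
  userData: string;
}

interface FundManagement {
  sender: string;
  fromInternalBalance: boolean;
  recipient: string;
  toInternalBalance: boolean;
}

// Hypothetical helper: a GIVEN_IN swap of `amount` of assetIn for assetOut.
function buildSingleSwap(poolId: string, assetIn: string, assetOut: string, amount: string): SingleSwap {
  if (!/^[1-9][0-9]*$/.test(amount)) {
    throw new Error(`Amount must be a positive integer string: ${amount}`);
  }
  return { poolId, kind: SwapKind.GIVEN_IN, assetIn, assetOut, amount, userData: "0x" };
}

// Hypothetical helper: the same account pays for and receives the swap,
// using ERC20 balances rather than Vault internal balance.
function buildFunds(account: string): FundManagement {
  return { sender: account, fromInternalBalance: false, recipient: account, toInternalBalance: false };
}

// Deadline as a UNIX timestamp in seconds, `seconds` from `nowMs`.
function deadlineIn(seconds: number, nowMs: number = Date.now()): string {
  return String(Math.floor(nowMs / 1000) + seconds);
}

const exampleSwap = buildSingleSwap("0xpool", "0xassetIn", "0xassetOut", "1000000");
console.log(exampleSwap.kind, deadlineIn(600));
```

For a GIVEN_IN swap the limit is the minimum amount out, so leaving it at '0' accepts any output. That is fine on a local fork but not elsewhere. On a forked node the chain's clock may also differ from wall-clock time, so it is worth checking before relying on a tight deadline.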

To display the swap data from the Store I use the storeCache which is typed and reactive. A simplified snippet shows how:

    import { useRows } from "@latticexyz/react";
    import { useMUD } from "./MUDContext";

    export const App = () => {
      const {
        systemCalls: { worldSwap },
        network: { storeCache },
      } = useMUD();

      const swaps = useRows(storeCache, { table: "Swap" });

      return (
        <>
          ...
          <div>Swaps:</div>
          <ul>
            {swaps.map(({ value }, increment) => (
              <li key={increment}>
                Amount In: {value.amount.toString()} Amount Out: {value.amountReturned.toString()}
              </li>
            ))}
          </ul>
        </>
      );
    };

(One other hack I had to make to get it working: in packages/client/src/mud/getNetworkConfig.ts I had to update the initialBlockNumber to 17295542.)
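The list above prints raw integer amounts, which are hard to read for tokens with many decimals. A small sketch of a string-based formatter follows; `formatUnits` here is a standalone, hypothetical helper (not an import from any library), and the number of decimals is an assumption that has to be supplied per token:

```typescript
// Converts an integer string in a token's smallest unit to a decimal string.
function formatUnits(raw: string, decimals: number): string {
  if (!/^[0-9]+$/.test(raw)) {
    throw new Error(`Not a non-negative integer: ${raw}`);
  }
  if (decimals === 0) {
    return raw.replace(/^0+(?=\d)/, "");
  }
  // Left-pad so there is always at least one digit before the decimal point.
  const padded = raw.padStart(decimals + 1, "0");
  const whole = padded.slice(0, padded.length - decimals).replace(/^0+(?=\d)/, "");
  // Drop trailing zeros from the fractional part.
  const fraction = padded.slice(padded.length - decimals).replace(/0+$/, "");
  return fraction ? `${whole}.${fraction}` : whole;
}

console.log(formatUnits("1500000000000000000", 18)); // 1.5
console.log(formatUnits("42", 18));                  // 0.000000000000000042
```

In the list item this could be used as `formatUnits(value.amount.toString(), 18)`, assuming an 18-decimal token. Working on strings avoids any loss of precision for uint256 values.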

To demonstrate the reactive nature I also added another helper script that executes a swap with a random amount (see packages/helpers/src/worldSwap.ts). This can be run using pnpm swap, and it’s awesome to see the UI update automatically. I also really like the MUD Dev Tools, which show the Store updating.

Composability

I think one of the most exciting and original aspects of Autonomous Worlds is the opportunity for composability. With the standardisation of data formats in the MUD Store, experimentation is made easier. As an extremely basic demonstration of this I thought it was cool to show how the swap data could be used in another, non-DeFi app like a game. In this case I implemented the famous Google Dino hopper game, where a cactus is encountered whenever a swap is made. We can import the swap data as before and trigger a cactus whenever a new swap record is added. (See packages/client/src/dino for the implementation.)
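The trigger logic can be sketched independently of the game code. This is not the implementation in packages/client/src/dino; it is a minimal illustration, assuming each swap row exposes a unique string key, of how to fire a callback once per newly seen record (`createSwapWatcher` and `onNewSwap` are hypothetical names):

```typescript
// Returns an update function that reports each key the first time it is seen.
function createSwapWatcher(onNewSwap: (key: string) => void) {
  const seen = new Set<string>();
  // Call with the full list of current row keys each time the table updates.
  return function update(currentKeys: string[]): number {
    let fresh = 0;
    for (const key of currentKeys) {
      if (!seen.has(key)) {
        seen.add(key);
        onNewSwap(key); // e.g. spawn a cactus in the game
        fresh++;
      }
    }
    return fresh;
  };
}

let cacti = 0;
const update = createSwapWatcher(() => { cacti++; });
update(["0xaa"]);         // first render: one swap
update(["0xaa", "0xbb"]); // a second swap arrives
console.log(cacti);       // 2
```

In a React component the update function could be called from an effect that depends on the swaps array, so that re-renders with unchanged data do not spawn extra obstacles.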

Although basic, hacky and ugly, it demonstrates how an Autonomous World of composable games, DeFi and data can start to develop. The really cool thing is that nobody knows what shape it will take! MUD is a super cool tool and I’m excited to see it develop.